Overview

Are you ready to revolutionize your enterprise’s agility and drive unprecedented growth? SAFe™, the Scaled Agile Framework, is a proven methodology for implementing Agile practices at scale within organizations. It provides guidance for aligning teams, coordinating work, and delivering value in a more streamlined and predictable manner across entire enterprises.

By emphasizing cross-functional collaboration, iterative development, and continuous improvement, SAFe enables organizations to respond rapidly to changing market conditions, deliver high-quality products and services, and ultimately achieve greater business agility and success.

Services

Our SAFe (Scaled Agile Framework) solutions are tailored to propel your organization to new heights, aligning teams, strategies, and operations for maximum impact.

Assessment/Discovery

Identify inefficiencies and misalignment, document a case for change, and build a tailored, comprehensive action plan to achieve business outcomes

Workshops & Training

Empower teams and executives through immersive learning and certifications (both Scaled Agile certified and Cprime’s own) to effectively implement and scale agile practices within their organizations

Consulting

Align strategies with operations and drive digital transformation, with hands-on support to build collaboration, improve initiative delivery, and streamline portfolio management

Team Augmentation

Enhance your team’s capabilities with skilled professionals, boosting productivity, efficiency, and overall project success

How We Can Help

As industry pioneers and leaders in SAFe implementation, our global roster of top industry experts, including three SAFe™ Fellows (Ken France, Darren Wilmshurst, and Isaac Montgomery), brings unparalleled experience and expertise to every engagement. Every initiative, workshop, and course is focused on realizing measurable impact, delivering increments of value in bite-sized chunks. From strategy workshops to hands-on coaching, we work with organizations to craft unique adoption strategies that drive towards sustainable growth and success.

As SAFe experts with 10+ years as trusted SAFe platform and transformation partners, we have delivered outcomes for our clients.

Trusted Experts and Partners

Our skills and experience, along with having more SPCTs than any other Scaled Agile partner, make us the best choice for organizations looking to transform their ways of working and deliver value faster.

Benefits of Adopting the SAFe Framework

By enabling organizations to effectively scale new ways of working beyond individual teams to entire enterprises, the SAFe framework can help organizations achieve greater business agility, adaptability, and resilience in today’s rapidly changing and competitive market landscape.

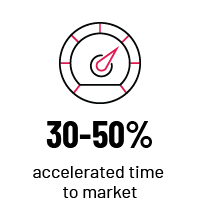

Accelerated Time to Market

Optimize processes and workflows to deliver value to customers more rapidly and gain a competitive edge

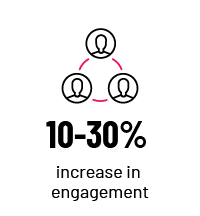

Enhanced Collaboration & Alignment

Align teams, strategies, and objectives to foster cross-functional collaboration between business and IT, driving better outcomes.

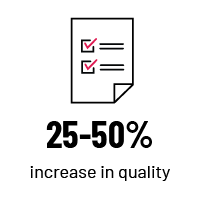

Cross-Portfolio Visibility & Alignment

Drive clear understanding and coordination across projects and teams, ensuring all efforts support overarching strategic goals

Customer Success Story

Upgrading the Enterprise: A SAFe® Portfolio Transformation at a Global P&C Insurance Provider

Faced with changing industry requirements and the challenges of profitably bringing complex products to market, this global insurance provider turned to Cprime to help align their product portfolios and assist with their Lean/Agile transformation at the highest organizational level.

More Insights

Blogs, whitepapers, webinars, and more.

SAFe® Transformation with Cprime: Tools to Consider Before You Start

How do you embrace the disruption that a SAFe® Transformation brings to your teams and create sustainable enterprise-wide value? The decision…

How to Manage Unplanned Work in SAFe?

At the time of this writing, which is early 2023, Scaled Agile Framework® (SAFe) remains the most popular framework in use…

SAFe Coaches Handbook:

Proven tips and techniques for launching and running SAFe® Teams, ARTs, and Portfolios in an Agile Enterprise by Darren Wilmshurst, Director, Agility COE and SAFe Fellow